I’m an Associate Professor in the School of Education at the University of California, Davis, where I teach and conduct research on assessment, testing, and quantitative methods in the social sciences, primarily psychometric methods. I advise doctoral students and consult on state and federal projects in educational testing. I also conduct trainings and professional development in item writing and assessment design.

Here’s my CV.

My research interests are clustered in three main areas within the field of educational and psychological measurement.

Assessment Development

I’ve helped design and build a variety of assessments, most of them used in educational settings. I’m currently working on formative math assessments for preschoolers. The assessments will be delivered via tablet, and we’re exploring an adaptive administration based on multidimensional Rasch models. This project extends previous work on assessment of early literacy (Albano, McConnell, Lease, & Cai, 2020; Bradfield et al., 2014).

I’m also interested in improving assessment literacy, the knowledge and skills required to use assessment effectively in the classroom, specifically by supporting teachers in the item-writing process. This was the main motivation for the Proola web app (proola.org), which facilitates collaborative assessment development. We used Proola to build openly licensed item banks for introductory college courses in macroeconomics, medical biology, and sociology (Miller & Albano, 2017). The banks are available here in QTI format. Analysis of the results is underway: we’re examining item quality and alignment to learning objectives using natural language processing tools.

In a recent book (Rodriguez & Albano, 2017; Routledge link; Amazon link), a colleague and I outline the assessment process, from development to implementation, in the higher ed classroom.

Scaling, Linking, and Equating

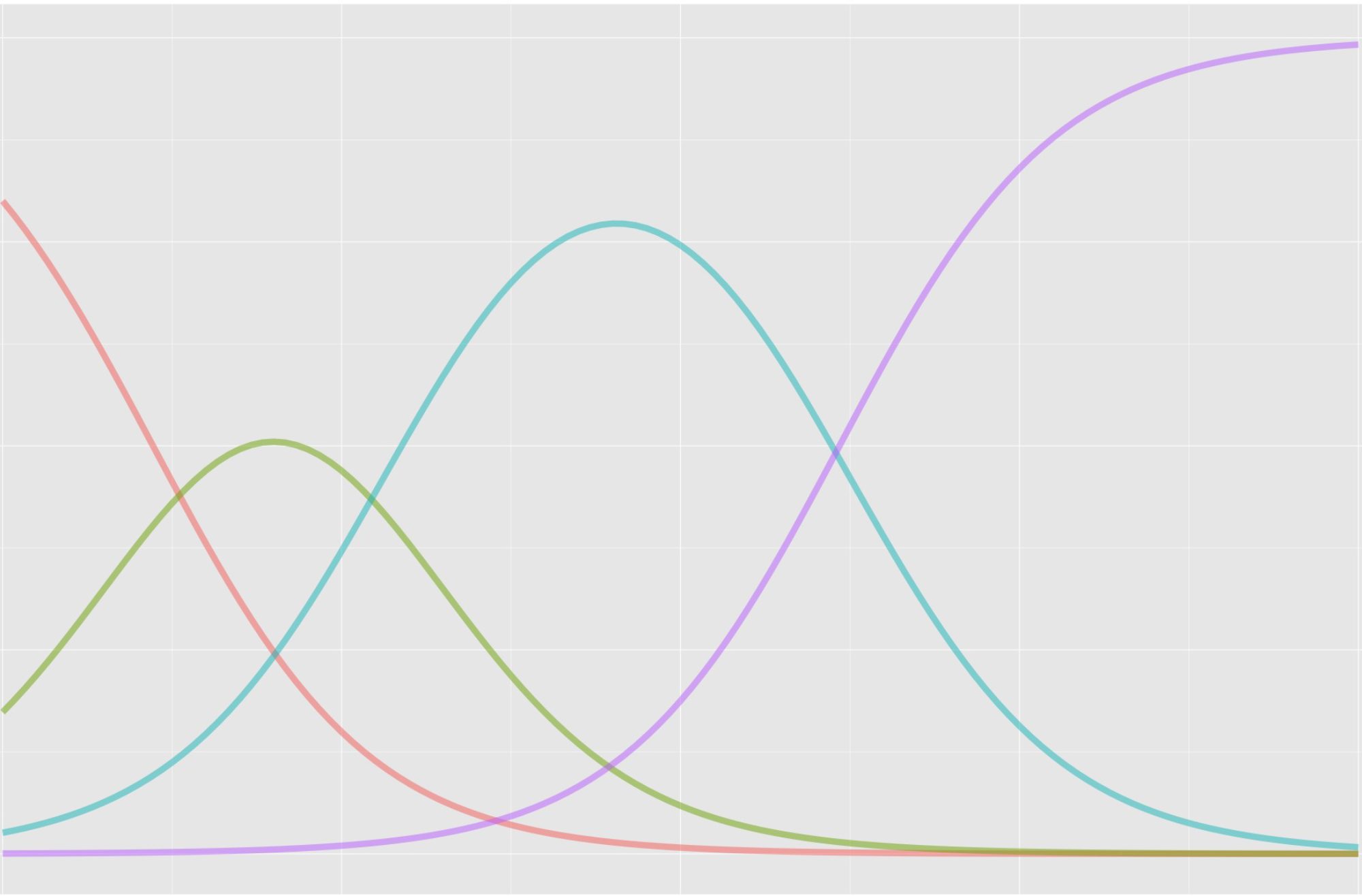

Scaling, linking, and equating are statistical methods used to build and connect measurement scales. I have an R package that performs observed-score linking and equating, and I’m interested in finding linking methods that work well with small samples, unreliable measures, external anchor tests, or in other less-than-ideal testing situations (e.g., Albano, 2015; Albano & Wiberg, 2019).
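As a rough illustration of what observed-score equating does, here is a minimal sketch of linear equating, assuming an equivalent-groups design in which two comparable samples each take one form. The function name `linear_equate` and the score data are hypothetical, and this is not the interface of the R package mentioned above. The idea is to map a form X score to the form Y score that has the same standardized position.

```python
from statistics import mean, pstdev


def linear_equate(x_scores, y_scores):
    """Return a function that maps form X scores onto the form Y scale.

    Linear equating sets standardized scores equal:
        (x - mu_x) / sd_x = (y - mu_y) / sd_y
    which gives y = mu_y + (sd_y / sd_x) * (x - mu_x).
    """
    mu_x, mu_y = mean(x_scores), mean(y_scores)
    sd_x, sd_y = pstdev(x_scores), pstdev(y_scores)
    if sd_x == 0:
        raise ValueError("form X scores have zero variance; cannot equate")
    slope = sd_y / sd_x
    intercept = mu_y - slope * mu_x
    return lambda x: slope * x + intercept


# Hypothetical total scores from two equivalent groups (illustration only).
form_x = [10, 12, 14, 16, 18]
form_y = [13, 16, 19, 22, 25]

to_y_scale = linear_equate(form_x, form_y)
for x in (10, 14, 18):
    print(x, round(to_y_scale(x), 2))
```

With small samples the means and standard deviations are themselves noisy, which is one reason simpler or smoothed linking functions can be preferable in less-than-ideal testing situations.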

Item Analysis

I use multilevel modeling in the context of item analysis. The main goal is to identify variables, beyond the construct being measured, that affect item-level performance, so as to inform and improve the assessment development process. Traditional measurement models assume that the biasing influence of such extraneous variables is null or negligible. However, research shows that certain sources of bias can be problematic. Multilevel item-response models let us examine this item-level bias directly.

Examples of variables that impact item performance are: time, causing what is called item parameter drift (Babcock, Albano, & Raymond, 2012); person grouping, e.g., special ed compared to general ed (Wyse & Albano, 2015) or women compared to men (Albano & Rodriguez, 2013), causing what is called differential item functioning; and other variables like item position, item type, response latency, motivation, test anxiety, and opportunity to learn (Albano, 2013).