Apparently, the Implicit Association Test (IAT) has been overhyped. Much like grit and power posing, two higher-profile letdowns in pop psychology, implicit bias seems to have attracted more attention than the research justifies. Twitter pointed me to a couple of articles from 2017 that clarify the limitations of the IAT as a measure of racial bias.

https://www.vox.com/identities/2017/3/7/14637626/implicit-association-test-racism

https://www.thecut.com/2017/01/psychologys-racism-measuring-tool-isnt-up-to-the-job.html

The Vox article covers these main points.

- The IAT might work to assess bias in the aggregate, for a group of people or across repeated testing for the same person.

- It can’t actually predict individual racial bias.

- The limitations of the IAT don’t mean that racism isn’t real, just that implicit forms of it are hard to measure.

- As a result, focusing on implicit bias may not help in fighting racism.

The second article, from New York Magazine's The Cut, gives some helpful references and outlines a few measurement concepts.

There’s an entire field of psychology, psychometrics, dedicated to the creation and validation of psychological instruments, and instruments are judged based on whether they exceed certain broadly agreed-upon statistical benchmarks. The most important benchmarks pertain to a test’s reliability — that is, the extent to which the test has a reasonably low amount of measurement error (every test has some) — and to its validity, or the extent to which it is measuring what it claims to be measuring. A good psychological instrument needs both.

Reliability for the IAT appears to land below 0.50, based on test-retest correlations. Interpretations of reliability depend on context and there aren't clear standards, but in my experience even 0.60 is usually considered too low to be useful. A reliability of 0.50 would indicate that 50% of the observed variance in scores reflects consistent differences between people (true-score variance), whereas the other 50% is random measurement error.
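To make that variance split concrete, here is a small simulation under classical test theory (my assumption; the articles don't say which model underlies the IAT's reliability estimates). Each simulated person gets a true score plus independent random error at each sitting. With true-score variance set to 0.50 of the total, two sittings should correlate at roughly 0.50. The function names, sample size, and seed are illustrative choices, not anything from the IAT itself.

```python
import math
import random


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def simulate_test_retest(reliability, n_people=50_000, seed=1):
    """Classical test theory sketch: observed = true + error, total variance 1.

    True-score variance is set to `reliability` and error variance to
    1 - reliability, so two independent administrations should correlate
    at roughly `reliability`.
    """
    rng = random.Random(seed)
    true_sd = math.sqrt(reliability)
    error_sd = math.sqrt(1 - reliability)
    true_scores = [rng.gauss(0, true_sd) for _ in range(n_people)]
    # Each administration adds its own independent random error.
    test = [t + rng.gauss(0, error_sd) for t in true_scores]
    retest = [t + rng.gauss(0, error_sd) for t in true_scores]
    return pearson_r(test, retest)


print(round(simulate_test_retest(0.50), 2))
```

The simulated correlation hovers around 0.50; rerunning with 0.80 shows how much more stable scores become at a reliability level most psychometricians would accept.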

I haven’t seen reporting on the actual scores that determine whether someone has or does not have implicit bias. Psychometrically, there should be a scale, and it should incorporate decision points or cutoffs beyond which a person is reported to have a strong, weak, or negligible bias.

Until I find some info on scaling, let's assume that the final IAT result is a z-score centered at 0 (no bias) with a standard deviation (SD) of 1. A reliability of 0.50, the best-case scenario here, gives a standard error of measurement (SEM) of SD × √(1 − 0.50) = √0.50 ≈ 0.71. In other words, the random error in a person's observed score has a standard deviation of about 0.71 points, so repeated scores for the same person would typically scatter by about that much around their true score.
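The SEM figure above comes from the classical test theory formula SEM = SD × √(1 − reliability). A minimal Python sketch, assuming (as the post does) a z-score scale with SD = 1; the function name is my own:

```python
import math


def standard_error_of_measurement(sd, reliability):
    """SEM = SD * sqrt(1 - reliability), from classical test theory."""
    if not 0 <= reliability <= 1:
        raise ValueError("reliability must be between 0 and 1")
    return sd * math.sqrt(1 - reliability)


# Assumed z-score scale (SD = 1) with the best-case reliability of 0.50.
sem = standard_error_of_measurement(sd=1.0, reliability=0.50)
print(f"{sem:.2f}")  # prints 0.71
```

For comparison, a reliability of 0.80 on the same scale would give an SEM of about 0.45.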

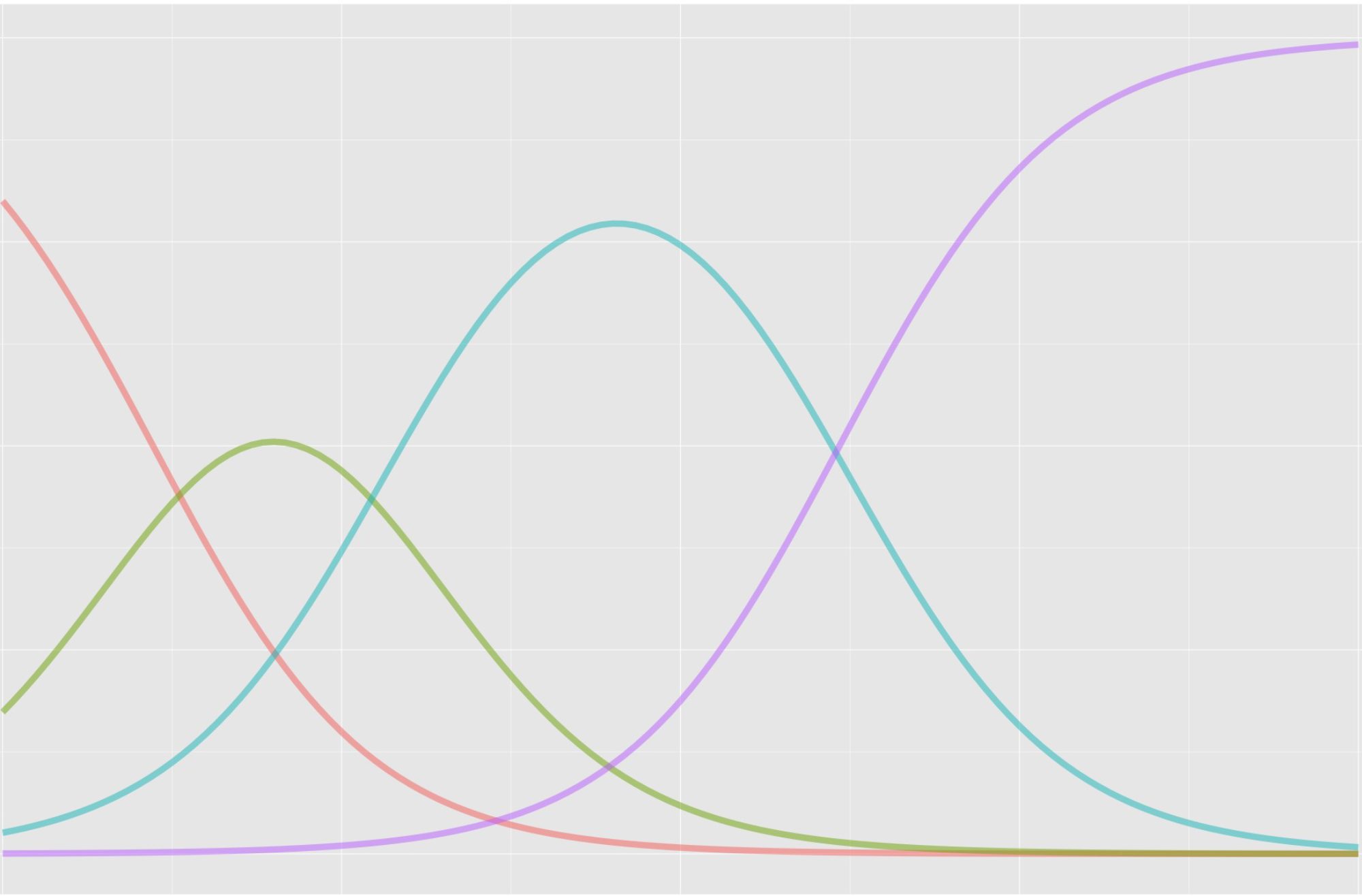

[Confidence Intervals in Measurement vs Political Polls]

Without knowing the score scale and how it's implemented, we don't know the ultimate impact of an SEM of 0.71, but we can say that score differences across much of the scale are uninterpretable. A 95% confidence interval for a score of 0 (no bias) runs from about -1.39 to +1.39 (0 ± 1.96 × 0.71). Even a score of +1, one standard deviation above the mean, has a 95% confidence interval (about -0.39 to +2.39) that still contains 0.
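Here is a short sketch of that interval arithmetic, centering a band of ±1.96 × SEM on the observed score and checking whether it contains 0. It keeps the post's assumed z-score scale and reliability of 0.50; `score_interval` is a hypothetical helper, not part of any IAT scoring code. With the exact 1.96 multiplier the band for a score of 0 is about ±1.39; rounding the multiplier to 2 gives ±1.41.

```python
import math

Z_95 = 1.96  # two-sided 95% normal critical value


def score_interval(observed, sd=1.0, reliability=0.50, z=Z_95):
    """Band of observed +/- z * SEM around an individual score."""
    sem = sd * math.sqrt(1 - reliability)
    half_width = z * sem
    return observed - half_width, observed + half_width


for score in (0.0, 1.0, 1.5):
    low, high = score_interval(score)
    note = "includes 0" if low <= 0 <= high else "excludes 0"
    print(f"score {score:+.1f}: [{low:+.2f}, {high:+.2f}] {note}")
```

Under these assumptions, an observed score has to exceed roughly +1.39 before its 95% interval rules out "no bias."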

The authors of the test acknowledge that results can't be interpreted reliably at the individual level, but the way results are reported in practice suggests otherwise. I took the online test a few times (at https://implicit.harvard.edu/), and the score report at the end includes phrasing like "your responses suggest a strong automatic preference…", followed by this disclaimer:

> These IAT results are provided for educational purposes only. The results may fluctuate and should not be used to make important decisions. The results are influenced by variables related to the test (e.g., the words or images used to represent categories) and the person (e.g., being tired, what you were thinking about before the IAT).

The disclaimer is on track, but a more honest and transparent message would include a simple index of unreliability, like we see in reports for state achievement test scores.

Really though, if score interpretation at the individual level isn’t recommended, why are individuals provided with a score report?

Correlations between implicit bias scores and other variables, like explicit bias or discriminatory behavior, are also weaker than I'd expect given the amount of publicity the test has received. The original authors of the test reported an average validity coefficient (from meta-analysis) of 0.236 (Greenwald, Poehlman, Uhlmann, & Banaji, 2009; Greenwald, Banaji, & Nosek, 2015), whereas critics of the test reported a more conservative 0.148 (Oswald, Mitchell, Blanton, Jaccard, & Tetlock, 2013). Squaring these correlations gives the share of variance explained: at best, the IAT accounts for about 6% of the variability in the criterion measures of racial bias used in these meta-analyses, such as discriminatory behavior and judgments, and at worst about 2%.
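Turning a validity coefficient into "percent of variability" is just squaring it (r²). A quick Python check of the two meta-analytic averages cited above; the dictionary labels and function name are my own:

```python
# Validity coefficients quoted above (meta-analytic average correlations).
coefficients = {
    "Greenwald et al. (2009)": 0.236,
    "Oswald et al. (2013)": 0.148,
}


def variance_explained(r):
    """Share of criterion variance accounted for by a correlation r (r squared)."""
    return r ** 2


for source, r in coefficients.items():
    print(f"{source}: r = {r:.3f}, r^2 = {variance_explained(r):.1%}")
```

This prints about 5.6% and 2.2%, which round to the 6% and 2% figures.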

The implication here is that implicit bias gets more coverage than it currently deserves. We don't actually have a reliable way of measuring it, and even in aggregate, scores are only weakly correlated, if at all, with more overt measures of bias, discrimination, and stereotyping. Validity evidence is lacking.

This isn't to say we shouldn't investigate or talk about implicit racial bias. Instead, we should recognize that the IAT may not produce the clean, actionable results that we're expecting, and our time and resources may be better spent elsewhere if we want our trainings and education to have an impact.

References

Greenwald, A. G., Banaji, M. R., & Nosek, B. A. (2015). Statistically small effects of the Implicit Association Test can have societally large effects. Journal of Personality and Social Psychology, 108(4), 553–561.

Greenwald, A. G., Poehlman, T. A., Uhlmann, E. L., & Banaji, M. R. (2009). Understanding and using the Implicit Association Test: III. Meta-analysis of predictive validity. Journal of Personality and Social Psychology, 97, 17–41.

Oswald, F. L., Mitchell, G., Blanton, H., Jaccard, J., & Tetlock, P. E. (2013). Predicting ethnic and racial discrimination: A meta-analysis of IAT criterion studies. Journal of Personality and Social Psychology, 105, 171–192.